Algorithmic systems have been a part of our daily lives for quite some time. They make decisions for and about us by filtering job applications, delivering diagnoses regarding our health or assessing creditworthiness. It is therefore important for us to discuss how this technology can be used so that it presents us all with more opportunities than risks. We believe that, to this end, the design of algorithmic systems must follow certain rules. The Algo.Rules are our proposed set of principles. Our goal is to see the Algo.Rules applied in all relevant algorithmic systems.

Those of us involved with the “Ethics of Algorithms” project at the Bertelsmann Stiftung initiated the process for developing the Algo.Rules. We want to contribute to the design of algorithmic systems that result in a more inclusive society. Social worthiness – not technical feasibility – should be the guiding principle here. One of the overriding objectives of the Stiftung’s work is to ensure that digital transformation serves the needs of society. We aim to strengthen the voice of civil society, build bridges between disciplines, foster collaboration between theory and practice, and take a proactive approach to solving problems. We believe that developing rules for the design of algorithmic systems is one of many promising strategies that, in combination, can help ensure that algorithms serve the common good. The independent think tank iRights.Lab has been commissioned to manage the development process. iRights.Lab has several years of experience in taking a transdisciplinary approach to developing complex systems and structures together with a diverse network, and in making these systems relevant for practical use. Together, we aim to drive the public debate further and make a positive contribution to society in this field.

The Algo.Rules were created in an interdisciplinary, multisectoral and open process. We work in an interdisciplinary manner because the effects of algorithmic systems can only be understood through the convergence of different perspectives. We take a multisectoral approach because actors from academia, civil society, politics and the business sector should engage in more extensive dialogue with one another. We have also chosen to pursue a fundamentally open approach, because the future of our digitalized society concerns all of us.

The process was launched at a workshop in May 2018. The theoretical groundwork for the Algo.Rules was laid in the context of two studies (on success factors for codes of professional ethics, and on the strengths and weaknesses of existing compendia of quality criteria for the use of algorithms), as well as through the consideration of numerous other sets of principles for algorithmic systems. In parallel, we consulted with 40 experts from the political, business, civil society and academic sectors over the course of the summer, asking them for intensive feedback. A further workshop in the autumn of 2018 addressed issues related to the implementation of the Algo.Rules. Finally, at the end of that year, the general public was invited to take part and contribute ideas through an online participation process. After evaluating this process, the Algo.Rules were launched in March 2019 at a press conference.

Subsequently, we worked on specifying the Algo.Rules for two focus groups: developers and executives. For this purpose, two workshops and numerous consultations with experts from the field took place during 2019. The resulting guidebook, published in June 2020, provides information on how each of the Algo.Rules can be implemented in practice. An impulse paper describes the role of executives in this process.

The Algo.Rules project is part of a group of initiatives that aim to promote the design of algorithmic systems for the common good. To start an exchange of ideas, we organized a workshop with experts from all over Europe.

To put the Algo.Rules into practice beyond this, we participated in the development of an AI ethics label. As part of the AI Ethics Impact Group, we developed a proposal to help put general AI ethics principles into practice. The label makes AI ethics measurable and operational. We published the corresponding working paper in April 2020.

Since then, we have been preparing further practical aids for the public sector. Many algorithmic systems that are used in the public sector can have a strong impact on the lives of individuals and on society. By August 2020, we want to show how the Algo.Rules can provide orientation in the planning, development and use of algorithmic systems.

However, the process will not end there. We call on interested parties to work with us in developing the Algo.Rules further. We invite others to borrow, adapt and expand them, and above all to find ways to implement the rules in a real-world context. The Algo.Rules are and remain a dynamic process.

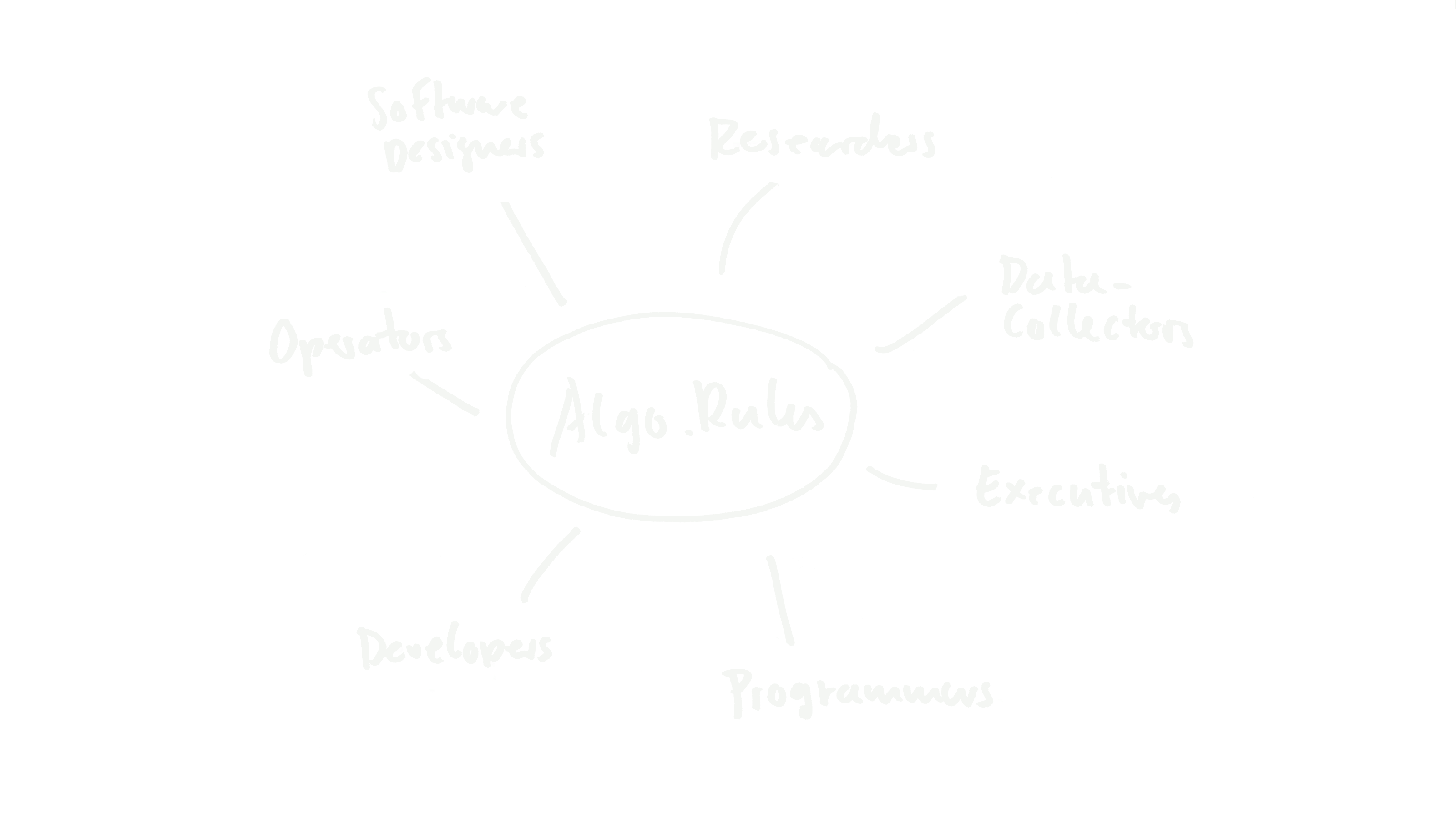

A professional ethic for programmers alone would not be enough, as programmers are not the only ones who play a crucial role in shaping algorithmic systems. Many other professional groups, from company executives to the users themselves, are just as relevant. We do not want to look simply at the code of a program. Rather, we want to examine how the application is socially embedded, whom it will affect and how it is implemented. The Algo.Rules are therefore oriented toward the entire process of developing and using algorithmic systems. One of the strengths of our approach is that it addresses multiple target groups together. The target groups relevant in this regard are described in greater detail both here and in the preamble.

The Algo.Rules do not claim to apply to all algorithmic systems. They apply only to those that have a direct or indirect, but always significant, impact on people’s lives or on society. For example, this includes software used to filter job applications, make recommendations to the police or to courts, or make decisions in disease-diagnosis settings. Thus, our approach is not about establishing design rules for all algorithmic systems, but only for those that are relevant in this way. A recent assessment of potential impact on social inclusion, carried out by Ben Wagner and Kilian Vieth on behalf of the Bertelsmann Stiftung, offers an example of criteria that could guide this kind of relevance evaluation. Among other factors, relevance depends on who is implementing the algorithmic system, whether it is making decisions about people, into what larger processes and in what area the system is embedded, and what consequences the system’s decisions might have for individuals’ lives or for society more broadly. In this regard, we classify systems at different levels: the stronger an algorithmic system’s potential influence on people’s lives or on society, the more important it is that the Algo.Rules be followed, and the more stringently the system should be reviewed.

We have looked closely at sets of principles and criteria that have previously been published or are currently in the development phase. Most of these were created in the USA. In a previous study, we analyzed three of them in greater detail: the “Principles for Accountable Algorithms and a Social Impact Statement for Algorithms” produced by the FAT/ML Conference, the Future of Life Institute’s “Asilomar AI Principles,” and the “Principles for Algorithmic Transparency and Accountability” published by the ACM U.S. Public Policy Council. Our work also incorporated findings from analyses of further compendia and from exchanges with numerous other projects. The AI Ethics Guidelines Global Inventory provides a good overview of existing initiatives.

The Algo.Rules represent a complement to and a further development of existing initiatives. They differ from other compendia in two respects: First, they are not oriented solely toward programmers. Rather, they address all persons who are involved in the development and application of algorithmic systems. Second, they include formal design rules, while eschewing reference to moral norms. To a certain degree, this makes them universal.

The Algo.Rules emerge from a European cultural context. However, they have also been influenced by international discussions regarding the ethics and value-oriented design of algorithmic decision-making systems and applications. The version presented here has thus far been drafted primarily by actors active in Germany. However, the Algo.Rules are oriented toward a public beyond Germany, and are thus being published in other languages as well. As the Algo.Rules do not contain moral norms, they are to a certain degree universal, and applicable within a variety of cultural contexts.

Aside from the effort to address a diverse and wide-ranging target group, the main challenge facing the Algo.Rules lies in their actual implementation. Our analysis has shown that many other existing compendia of criteria have failed because their drafters did not focus specifically on the practicalities of implementation, or because their implementation strategies never bore fruit. This is precisely why we are developing practical support resources. The first step was to specify the Algo.Rules for the target groups of developers and executives. We are currently working on the application of the rules in the public sector. For this purpose, we are developing a handbook for public institutions to implement the Algo.Rules in the planning, development, procurement and use of algorithmic systems.

The Algo.Rules are an offer to the public and a possible starting point for many relevant processes. The guide for developers and the impulse paper for executives show what the Algo.Rules mean for specific target groups. Important steps have thus already been taken. The next and most important step is concrete implementation, and here we focus on the public sector. Whether it is predictive policing, the automatic processing of social welfare applications or the sorting of unemployed people, some of the most hotly debated algorithmic systems come from the public sector. Systems used there tend to have a major impact on the lives of individuals and on society. It is therefore particularly important to use the Algo.Rules to create a basis for ethical considerations and for the implementation and enforcement of legal frameworks in the public sector. To this end, we analyze various case studies as well as the processes by which public institutions plan, develop and use algorithmic systems. We consult and interview experts from the public sector. The aim is to make the Algo.Rules practically applicable and to develop proposals for implementation measures.

The Algo.Rules remain an invitation: Anyone who wants to help us create more specific versions of the Algo.Rules, or who has an idea regarding applications that could be used to test the implementation of the rules, should reach out to us using our contact form.

![Build competency](/fileadmin/files/alg/01.jpg)

![Define responsibilities](/fileadmin/files/alg/02.jpg)

![Document goals and expected impact](/fileadmin/files/alg/03.jpg)

![Guarantee security](/fileadmin/files/alg/04.jpg)

![Provide labeling](/fileadmin/files/alg/05.jpg)

![Ensure intelligibility](/fileadmin/files/alg/06.jpg)

![Safeguard manageability](/fileadmin/files/alg/07.jpg)

![Monitor impact](/fileadmin/files/alg/08.jpg)

![Enable complaints](/fileadmin/files/alg/09.jpg)

![Rules3-03](/fileadmin/files/alg/Rules3-03.png)

![AI label](/fileadmin/files/alg/KI_label-01.png)